Disease-driven domain generalization for neuroimaging-based assessment of Alzheimer’s disease.

Abstract

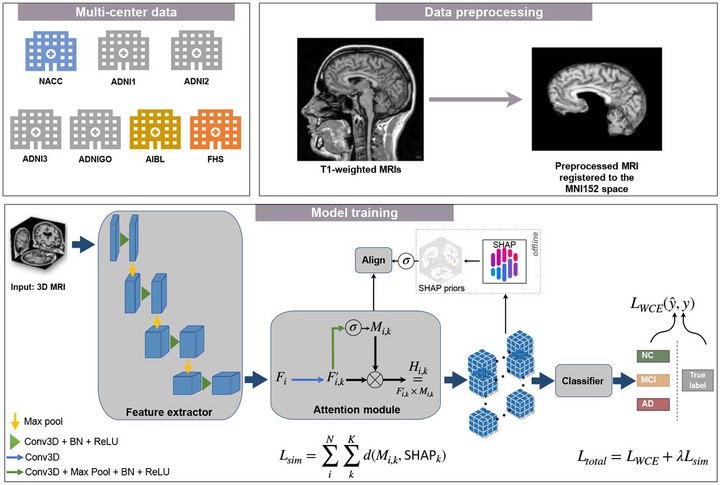

Development of deep learning models to assess the degree of cognitive impairment on magnetic resonance imaging (MRI) scans has high translational significance. The performance of such models is often affected by variability stemming from independent data-generation protocols, imaging equipment, radiology artifacts, and shifts in demographic distributions. Domain generalization (DG) frameworks have the potential to overcome these issues by learning signal from one or more source domains that transfers to unseen target domains. We developed an approach that leverages model interpretability as a means to improve the generalizability of classification models across multiple cohorts. Using MRI scans and clinical diagnoses obtained from four independent cohorts, namely the Alzheimer’s Disease Neuroimaging Initiative (ADNI, n = 1,821), the Framingham Heart Study (FHS, n = 304), the Australian Imaging Biomarkers and Lifestyle Study of Ageing (AIBL, n = 661), and the National Alzheimer’s Coordinating Center (NACC, n = 4,647), we trained a deep neural network that used model-identified regions of disease relevance to inform model training. We trained a classifier to distinguish persons with normal cognition (NC) from those with mild cognitive impairment (MCI) and Alzheimer’s disease (AD) by aligning class-wise attention with a unified visual saliency prior computed offline per class over all training data. Our proposed method is competitive with state-of-the-art methods while showing improved correlation with postmortem histology, thus grounding our findings in gold-standard evidence and paving the way towards validating DG frameworks.